On withdrawing “Ivermectin and the odds of hospitalization due to COVID-19,” by Merino et al.

4 February 2022

Preamble by Philip N. Cohen, director of SocArXiv

SocArXiv’s steering committee has decided to withdraw the paper, “Ivermectin and the odds of hospitalization due to COVID-19: evidence from a quasi-experimental analysis based on a public intervention in Mexico City,” by Jose Merino, Victor Hugo Borja, Oliva Lopez, José Alfredo Ochoa, Eduardo Clark, Lila Petersen, and Saul Caballero. [10.31235/osf.io/r93g4]

The paper is a report on a program in Mexico City that gave people medical kits when they tested positive for COVID-19, containing, among other things, ivermectin tablets. The conclusion of the paper is, “The study supports ivermectin-based interventions to assuage the effects of the COVID-19 pandemic on the health system.”

The lead author of the paper, José Merino, head of the Digital Agency for Public Innovation (DAPI), a government agency in Mexico City, tweeted about the paper: “Es una GRAN noticia poder validar una política pública que permitió reducir impactos en salud por covid19” (translation: “It is GREAT news to be able to validate a public policy that allowed reducing health impacts from covid19”). The other authors are officials at the Mexican Social Security Institute and the Mexico City Ministry of Health, and employees at the DAPI.

We have written about this paper previously. We wrote, in part:

“Depending on which critique you prefer, the paper is either very poor quality or else deliberately false and misleading. PolitiFact debunked it here, partly based on this factcheck in Portuguese. We do not believe it provides reliable or useful information, and we are disappointed that it has been very popular (downloaded almost 10,000 times so far). … We do not have a policy to remove papers like this from our service, which meet submission criteria when we post them but turn out to be harmful. However, we could develop one, such as a petition process or some other review trigger. This is an open discussion.”

The paper has now been downloaded more than 11,000 times, making it one of our most-read papers of the past year. Since we posted that statement, the paper has received more attention. In particular, an article in Animal Politico in Mexico reported that the government of Mexico City has spent hundreds of thousands of dollars on ivermectin, which it still distributes (as of January 2022) to people who test positive for COVID-19. In response, University of California-San Diego sociology professor Juan Pablo Pardo-Guerra posted an appeal to SocArXiv asking us to remove the “deeply problematic and unethical” paper and ban its authors from our platform. The appeal, in a widely shared Twitter thread, argued that the authors, through their agency dispensing the medication, recruited experimental subjects apparently without informed consent, which made the study unethical, and that they did not declare a conflict of interest, although they are employees of agencies that carried out the policy. The thread was shared or liked by thousands of people. The article and the response to it prompted us to revisit this paper. On February 1, I promised to bring the issue to our Steering Committee for further discussion.

I am not a medical researcher, although I am a social scientist reasonably well-versed in public health research. I won’t provide a scholarly review of research on ivermectin. However, it is clear from the record of authoritative statements by global and national public health agencies that, at present, ivermectin should not be used as a treatment or preventative for COVID-19 outside of carefully controlled clinical studies, which this clearly was not. These are some of those statements, reflecting current guidance as of 3 February 2022.

- World Health Organization: “We recommend not to use ivermectin, except in the context of a clinical trial.”

- US Centers for Disease Control and Prevention: “ivermectin has not been proven as a way to prevent or treat COVID-19.”

- US National Institutes of Health: “There is insufficient evidence for the COVID-19 Treatment Guidelines Panel (the Panel) to recommend either for or against the use of ivermectin for the treatment of COVID-19.”

- European Medicines Agency: “use of ivermectin for prevention or treatment of COVID-19 cannot currently be recommended outside controlled clinical trials.”

- US Food and Drug Administration: “The FDA has not authorized or approved ivermectin for use in preventing or treating COVID-19 in humans or animals. … Currently available data do not show ivermectin is effective against COVID-19.”

For reference, the scientific flaws in the paper are enumerated at the links above from PolitiFact, partly based on this factcheck from Estado in Portuguese, which included expert consultation. I also found this thread from Omar Yaxmehen Bello-Chavolla useful.

In light of this review, a program to publicly distribute ivermectin to people infected with COVID-19, outside of a controlled study, seems unethical. The paper is part of such a program, and currently serves as part of its justification.

To summarize, there remains insufficient evidence that ivermectin is effective in treating COVID-19; the study is of minimal scientific value at best; the paper is part of an unethical program by the government of Mexico City to dispense hundreds of thousands of doses of an inappropriate medication to people who were sick with COVID-19, a program that may continue to the present; and the authors of the paper have promoted it as evidence that their medical intervention is effective. This review is intended to help the SocArXiv Steering Committee reach a decision on the request to remove the paper (we set aside the question of banning the authors from future submissions, a sanction reserved for people who repeatedly violate our rules). The statement below followed from this review.

SocArXiv Steering Committee statement on withdrawing the paper by Merino et al. (10.31235/osf.io/r93g4).

This is the first time we have used our prerogative as service administrators to withdraw a paper from SocArXiv. Although we reject many papers under our moderation policy, we don’t have a policy for unilaterally withdrawing papers after they have been posted. We don’t want to make policy around a single case, but we do want to respond to this situation.

We are withdrawing the paper, and replacing it with a “tombstone” that includes the paper’s metadata. We are doing this to prevent the paper from causing additional harm, and taking this incident as an impetus to develop a more comprehensive policy for future situations. The metadata will serve as a reference for people who follow citations to the paper to our site.

Our grounds for this decision are several:

- The paper is spreading misinformation, promoting an unproved medical treatment in the midst of a global pandemic.

- The paper is part of, and justification for, a government program that unethically dispenses (or did dispense) unproven medication apparently without proper consent or appropriate ethical protections according to the standards of human subjects research.

- The paper is medical research – purporting to study the effects of a medication on a disease outcome – and is not properly within the subject scope of SocArXiv.

- The authors did not properly disclose their conflicts of interest.

We recognize that, of the thousands of papers we have accepted and now host on our platform, others may also have serious flaws.

We are taking this unprecedented action because this particular bad paper appears to be more important, and therefore potentially more harmful, than other flawed work. In administering SocArXiv, we generally err on the side of inclusivity, and do not provide peer review or substantive vetting of the papers we host. Taking such an approach suits us philosophically, and also practically, since we don’t have staff to review every paper fully. But this approach comes with the responsibility to respond when something truly harmful gets through. In light of demonstrable harms like those associated with this paper, and in response to a community groundswell beseeching us to act, we are withdrawing this paper.

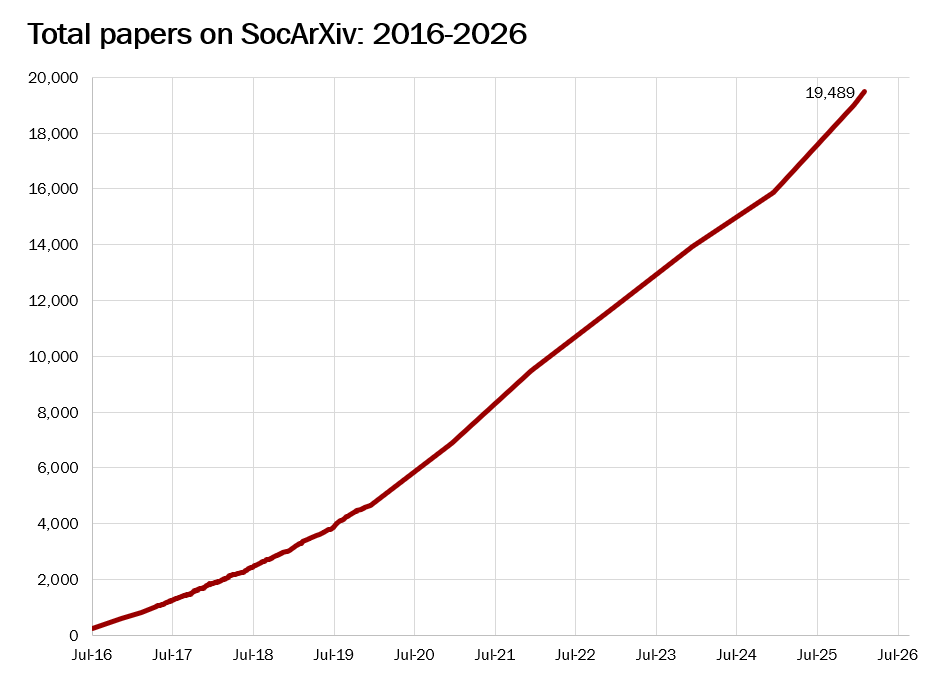

We reiterate that our moderation process does not involve peer review, or substantive evaluation, of the research papers that we host. Our moderation policy confirms only that papers are (1) scholarly, (2) in research areas that we support, (3) plausibly categorized, (4) correctly attributed, (5) in languages that we moderate, and (6) in text-searchable formats. Posting a paper on SocArXiv is not in itself an indication of good quality, but it is often a sign that researchers are acting in good faith and practicing open scholarship for the public good. We urge readers to consider this incident in the context of the greater good that open science and preprints in general, and our service in particular, do for researchers and the communities they serve.

We welcome comments and suggestions from readers, researchers, and the public. Feel free to email us at socarxiv@gmail.com, or contact us on our social media accounts at Twitter or Facebook.